Biomanufacturing built on synthetic biology can flexibly create a wide range of products that did not exist before. However, because biological systems are complex, researchers must rely on repeated experiments to cultivate and screen engineered microorganisms with superior performance. To reduce the number of experiments and make strain development more efficient, scientists are trying to use algorithmic models to predict production capacity.

Such a model, however, must first "learn" from a large amount of data before it can make predictions, and in general the more data it learns from, the more accurate its results. Quickly filtering and summarizing existing data is therefore a prerequisite for all subsequent work.

Recently, a multidisciplinary research team from the University of Washington used natural language processing (NLP) tools to accelerate the mining of synthetic biology data. The paper has been published in the journal ACS Synthetic Biology.

In the paper, the research team used GPT-4 to extract and summarize information from 176 publications on two yeast species (Yarrowia lipolytica and Rhodosporidium toruloides, hereinafter Y. lipolytica and R. toruloides).

With the help of GPT-4, the time needed to search and organize a comparable body of literature fell from about 400 hours to 40 hours. Moreover, machine learning models trained on the structured dataset and features compiled with the NLP tools predicted the fermentation yield of engineered yeast more accurately.

(Source: ACS Synthetic Biology)

GPT accelerates data mining: 40 hours for over 100 papers

Natural language processing (NLP) is a branch of artificial intelligence used for large-scale text and data processing. Broadly, such tools have two capabilities: natural language understanding and natural language generation. In other words, they can both understand existing natural-language text and generate natural-language text that expresses what the researcher intends.

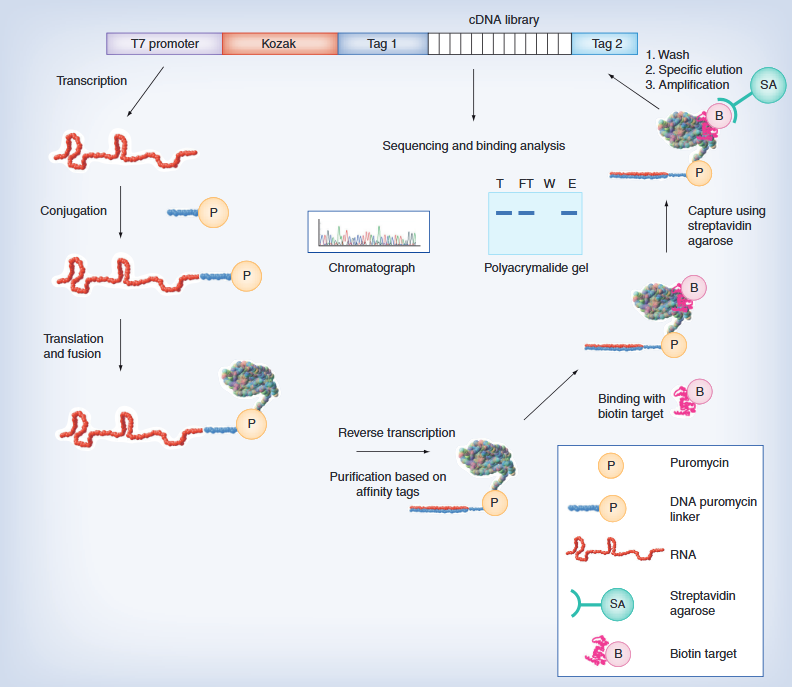

At the beginning of this year, OpenAI, a US artificial intelligence research laboratory, released the GPT-4 language model. With this tool, researchers can quickly extract relevant bioprocess features and results from published papers, rapidly growing databases that can then be used for machine learning (ML).

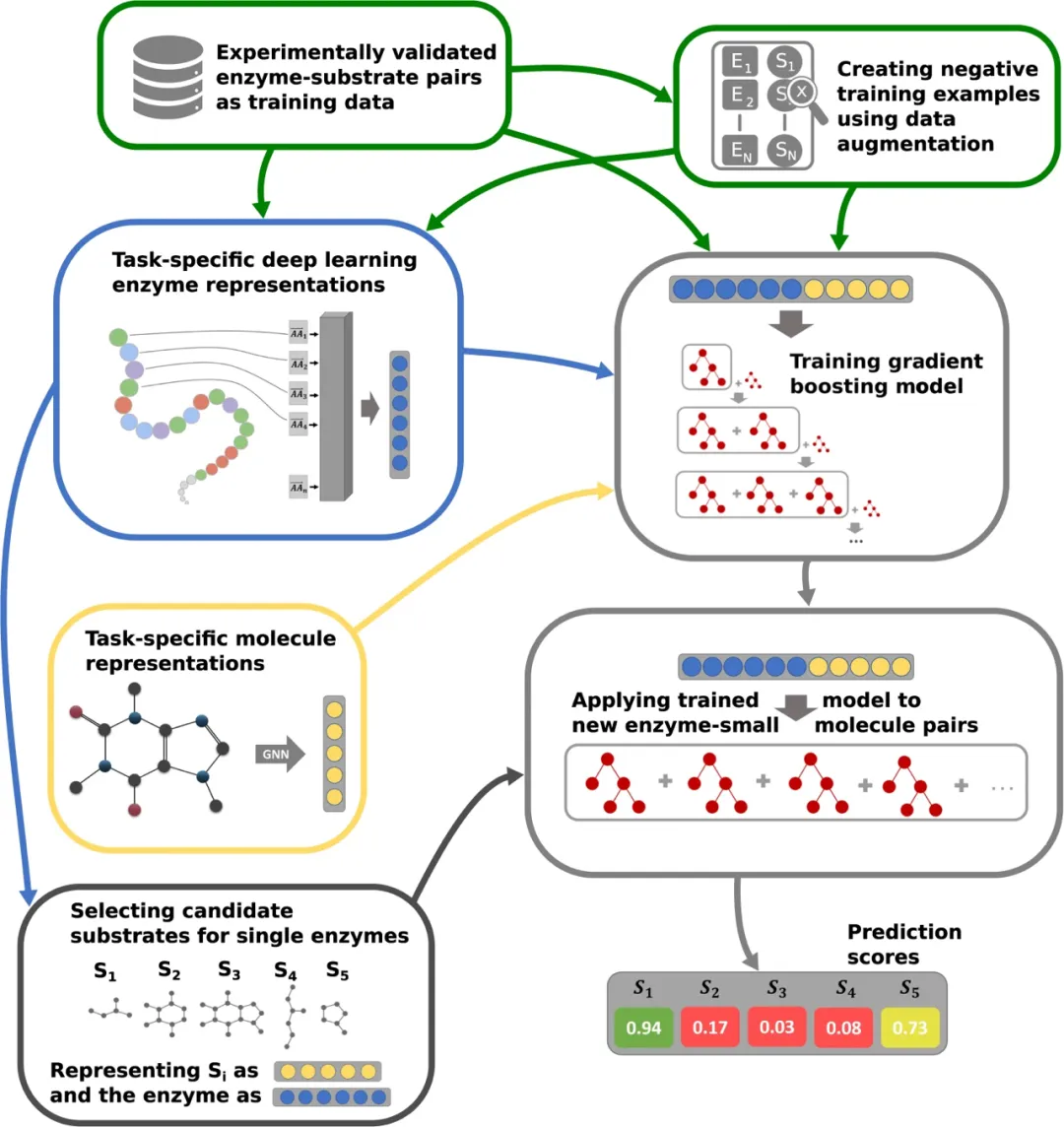

Using GPT-4 for Information Mining and Applying to AI (Source: ACS Synthetic Biology)

In this study, the research team used GPT-4 to extract information from articles on the industrial production yeast Y. lipolytica and then manually converted this information into data samples (instances). Each instance is one experimental record that links an output (product yield) to its inputs (experimental features). The feature variables cover a wide range of information, including bioprocess conditions, metabolic pathways, and genetic engineering methods. Extracting data quickly without omitting information is therefore an important criterion for evaluating GPT-4's performance.
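To make the idea of an instance concrete, here is a minimal Python sketch of one record that pairs input features with an output yield. The class name, field names, and example values are illustrative assumptions and are not taken from the paper's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class FermentationInstance:
    """One experiment: input features paired with the measured output."""
    paper_id: str          # hypothetical identifier for the source paper
    species: str
    product: str
    titer_g_per_l: float   # output: product yield
    # Inputs: bioprocess conditions, pathway and genetic engineering features.
    features: dict = field(default_factory=dict)


def to_row(instance, feature_names):
    """Flatten an instance into (x, y) using a fixed feature order.

    Features the paper did not report are returned as None.
    """
    x = [instance.features.get(name) for name in feature_names]
    return x, instance.titer_g_per_l


# Placeholder values for illustration only, not data from the study.
example = FermentationInstance(
    paper_id="paper_001",
    species="Y. lipolytica",
    product="lipid",
    titer_g_per_l=12.5,
    features={"carbon_source": "glucose", "temperature_c": 28.0,
              "genes_overexpressed": 2},
)
x, y = to_row(example, ["carbon_source", "temperature_c", "ph",
                        "genes_overexpressed"])
print(x, y)
```

Keeping unreported features as explicit `None` values, rather than dropping them, is one way to make missing information visible when checking how completely a paper was extracted.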

Before this, the research team had manually collected information from approximately 100 papers on Y. lipolytica, which took a well-trained graduate student more than 400 hours. Using GPT-4, the team obtained approximately 1,670 additional data instances from 115 related papers within 40 hours.
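The throughput implied by these figures can be worked out directly. The short Python sketch below uses only the approximate numbers quoted above, so the results are rough estimates rather than values reported by the authors.

```python
# Figures quoted in the article (all approximate).
MANUAL_PAPERS, MANUAL_HOURS = 100, 400
GPT_PAPERS, GPT_HOURS, GPT_INSTANCES = 115, 40, 1670


def hours_per_paper(hours, papers):
    """Average extraction time per paper."""
    return hours / papers


manual_rate = hours_per_paper(MANUAL_HOURS, MANUAL_PAPERS)
gpt_rate = hours_per_paper(GPT_HOURS, GPT_PAPERS)
speedup = manual_rate / gpt_rate
instances_per_paper = GPT_INSTANCES / GPT_PAPERS

print(f"manual: {manual_rate:.1f} h/paper")
print(f"GPT-4:  {gpt_rate * 60:.0f} min/paper")
print(f"speed-up per paper: {speedup:.1f}x")
print(f"instances per paper: {instances_per_paper:.1f}")
```

On these numbers, manual extraction averages about 4 hours per paper, while the GPT-4 workflow averages roughly 21 minutes per paper and yields about 14.5 instances per paper.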

To further test GPT's applicability, the team compared the manually extracted data with the GPT-extracted data using feature importance, feature variance, and principal component analysis (PCA). The feature importance distribution of the GPT-extracted data was similar to that of the manually extracted data, indicating that both datasets follow similar patterns.

However, 19 features in the GPT dataset have higher variance than in the manually extracted dataset. The researchers attribute this to the fact that, when classifying data, GPT considers not only carbon source and cofactor costs but also clusters by cultivation conditions and genetic engineering characteristics. In other words, GPT-4 can capture more distinctive features from a paper and reason over complex contextual data, producing less biased biomanufacturing instances.
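The feature variance part of this comparison can be sketched in a few lines of Python. This is a simplified illustration: the function names and toy rows are assumptions, and the feature importance and PCA comparisons, which need a machine learning library, are not shown.

```python
from statistics import pvariance


def feature_variances(rows, feature_names):
    """Population variance of each numeric feature across a list of dict rows."""
    return {name: pvariance([row[name] for row in rows])
            for name in feature_names}


def higher_variance_features(manual_rows, gpt_rows, feature_names):
    """Names of features whose variance is higher in the GPT-extracted data."""
    manual_var = feature_variances(manual_rows, feature_names)
    gpt_var = feature_variances(gpt_rows, feature_names)
    return [name for name in feature_names
            if gpt_var[name] > manual_var[name]]


# Toy rows for illustration only, not data from the study.
manual_rows = [
    {"temperature_c": 28, "ph": 6.0},
    {"temperature_c": 28, "ph": 6.5},
    {"temperature_c": 30, "ph": 6.0},
]
gpt_rows = [
    {"temperature_c": 25, "ph": 6.0},
    {"temperature_c": 30, "ph": 6.5},
    {"temperature_c": 35, "ph": 6.0},
]
features = ["temperature_c", "ph"]
wider = higher_variance_features(manual_rows, gpt_rows, features)
print(wider)
```

In practice, features would normally be put on a common scale before their variances are compared; that step is left out here for brevity.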

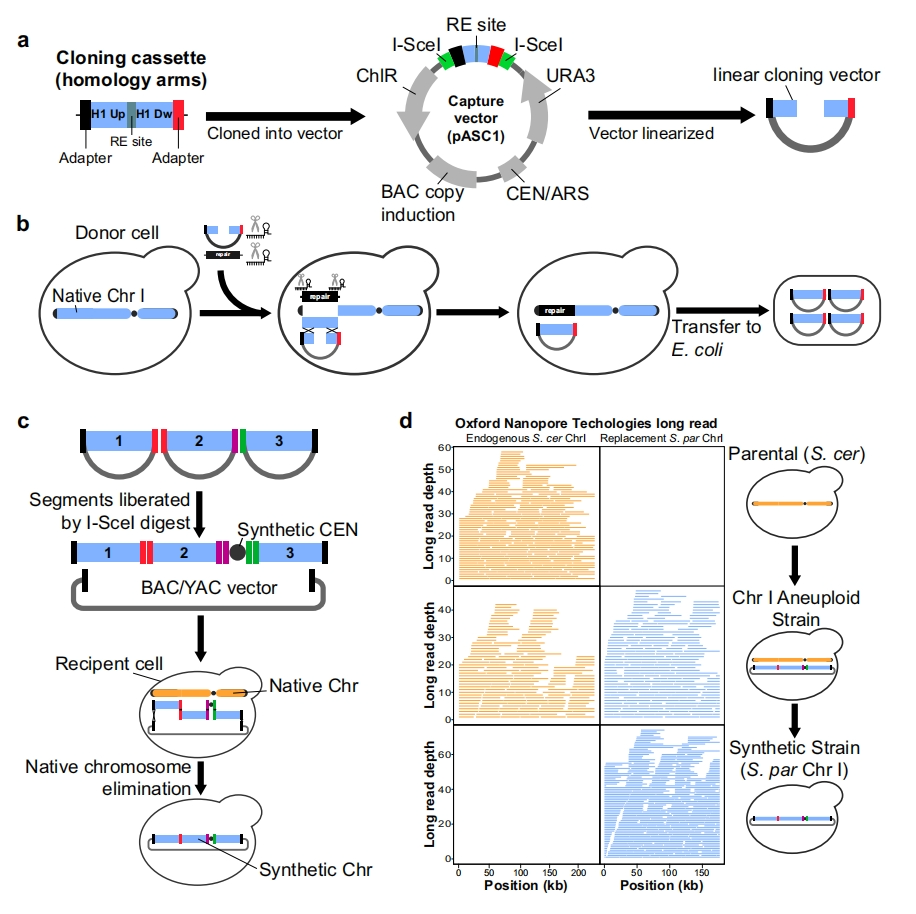

PCA Comparison of Manually Extracted and GPT-Extracted Data (Source: ACS Synthetic Biology)

The research team then built a database from the GPT-extracted data and used it to train ML models for predicting the fermentation yield of Y. lipolytica. When validated against published Y. lipolytica yield data that had not been entered into the database, the random forest (RF) model achieved a coefficient of determination (R²) of 0.86 between predicted and actual yields, the best among all algorithms tested.
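For readers unfamiliar with the metric, the sketch below computes R² in plain Python, assuming it denotes the usual coefficient of determination (one minus the ratio of residual to total sum of squares). The sample values are placeholders, not yields from the study.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equally sized sequences with 2+ values")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("actual values are constant; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


# Placeholder titers (g/L) for illustration only.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 3.5]
score = r_squared(actual, predicted)
print(round(score, 3))
```

A score of 1 means the predictions match the held-out yields exactly, while a score of 0 means the model does no better than always predicting the mean yield.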

Revealing the potential impact of genetic engineering through transfer learning

In recent years, the emerging yeast R. toruloides has attracted attention for its high lipid content and high natural carotenoid production. However, literature on this yeast is scarce and insufficient to build a standalone, usable database.

To address this issue, the research team adopted transfer learning (TL) to build a model suited to R. toruloides. Transfer learning was originally intended to save the time spent manually annotating samples: it lets a model carry knowledge from existing labeled data (the source domain) over to unlabeled data (the target domain), yielding a model suited to the new target.

In this study, transfer learning allowed the researchers to use the Y. lipolytica dataset (existing information) to reveal the potential impact of genetic engineering on fermentation yield.

Specifically, the research team extracted 366 fermentation data instances from 60 articles on R. toruloides, a yeast that has been engineered to produce astaxanthin. However, the relevant papers generally lack genetic engineering information. In addition, most fermentation yields of Y. lipolytica and R. toruloides in the database are at the gram-per-liter level, while the astaxanthin production of R. toruloides is at the milligram-per-liter level. This difference in order of magnitude makes low-yield products harder to predict. As a result, the correlation coefficient for the validation yield predictions was only slightly above 0.4, and the predictions were not accurate.
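The scale gap described here can be illustrated with a small Python sketch that converts titers to a common unit and measures how many orders of magnitude apart they are. The helper names and the 13 g/L value are hypothetical; the 1.3 mg/L value is the shake-flask astaxanthin figure quoted later in this article. The article does not say how the authors handled units, so this is an illustration of the problem rather than their method.

```python
import math

# Conversion factors to grams per liter.
UNIT_TO_G_PER_L = {"g/L": 1.0, "mg/L": 1e-3}


def to_g_per_l(value, unit):
    """Convert a titer to g/L; raises KeyError for unknown units."""
    return value * UNIT_TO_G_PER_L[unit]


def orders_of_magnitude_apart(titer_a_g_per_l, titer_b_g_per_l):
    """Absolute log10 ratio between two positive titers."""
    if titer_a_g_per_l <= 0 or titer_b_g_per_l <= 0:
        raise ValueError("titers must be positive")
    return abs(math.log10(titer_a_g_per_l / titer_b_g_per_l))


bulk_product = to_g_per_l(13.0, "g/L")   # hypothetical g/L-scale titer
astaxanthin = to_g_per_l(1.3, "mg/L")    # shake-flask figure quoted in the article
gap = orders_of_magnitude_apart(bulk_product, astaxanthin)
print(f"{gap:.1f} orders of magnitude")
```

A model trained mostly on values near the upper end of such a range can make errors that look small in absolute terms yet are larger than the entire milligram-scale target.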

At this point, relevant information has to be transferred from the Y. lipolytica database. The research team adopted two inductive learning methods: one is a neural network with a pre-trained encoder-decoder structure, used to study the effect of gene expression level on astaxanthin synthesis; the other is an instance-based TL method, used to address the gap between the source and target domains.

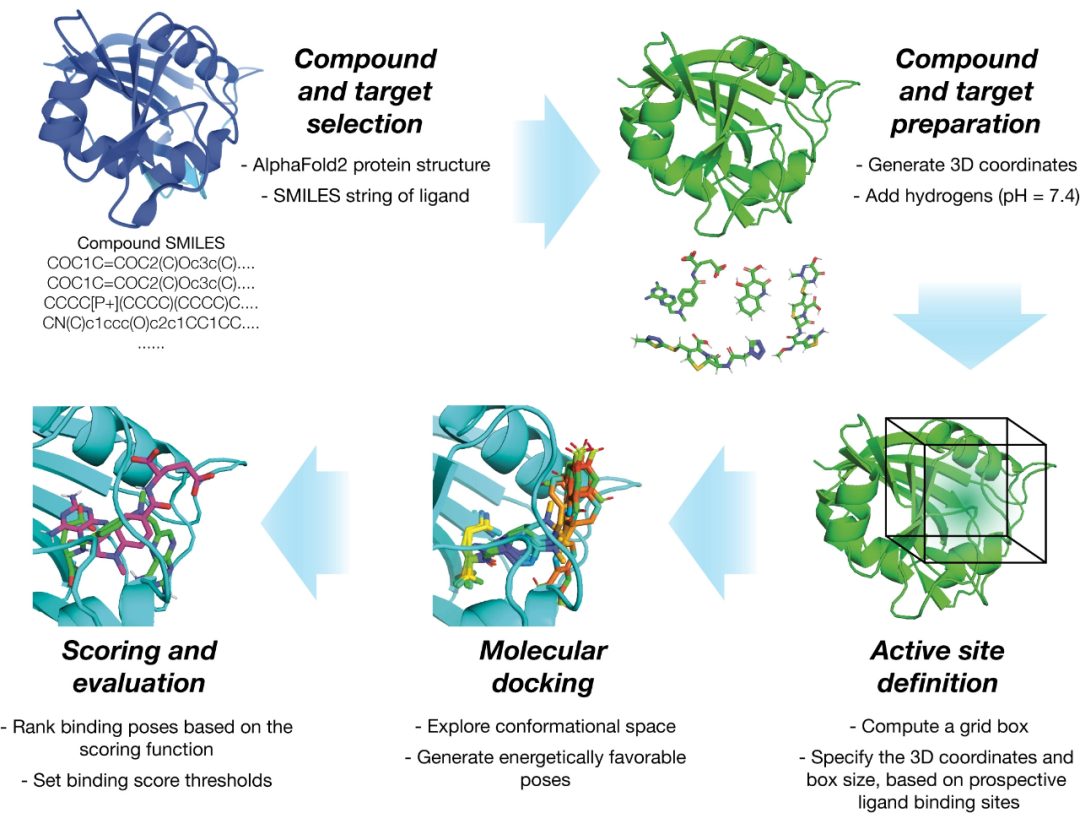

Using TL to Predict Astaxanthin Production in R. toruloides (Source: ACS Synthetic Biology)

In the new model, each training instance is labeled as Y. lipolytica or R. toruloides, and the R. toruloides instances are given three times the weight. Based on the data from both species, the new model predicts that astaxanthin production in wild-type R. toruloides after process optimization should not exceed 4.2 mg/L. This result is comparable to a recently published paper in which R. toruloides produced 1.3 mg/L of astaxanthin in shake flasks.

The model further predicts that optimizing gene expression can improve astaxanthin production in the engineered yeast. If six key genes are optimized, the average yield may reach 39.5 mg/L. It is worth noting that these predictions still carry considerable uncertainty. The team emphasizes that an RF model using instance-based transfer can provide reasonable predictions even when the database is incomplete.

Overall, this study aims to use GPT to automate the mining of information and data from the literature, thereby supporting the application of machine learning in synthetic biology. Researchers can use GPT-4 to process large volumes of information and reduce the time spent on literature analysis. These experiences will help improve existing data processing workflows and drive engineering practice, promoting the further application of GPT and machine learning in synthetic biology.
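The instance weighting described above can be sketched as follows. This minimal Python example only shows how a threefold weight on target-species instances enters a weighted error. The function names and sample values are assumptions, and the weighted mean squared error stands in for the training objective of the authors' model, which is not reproduced here.

```python
def instance_weights(species_labels, target="R. toruloides", target_weight=3.0):
    """Give target-species instances a larger weight than source instances."""
    return [target_weight if label == target else 1.0
            for label in species_labels]


def weighted_mse(actual, predicted, weights):
    """Mean squared error in which each instance counts in proportion to its weight."""
    total_weight = sum(weights)
    weighted_sum = sum(w * (a - p) ** 2
                       for a, p, w in zip(actual, predicted, weights))
    return weighted_sum / total_weight


# Placeholder values for illustration only, not data from the study.
labels = ["Y. lipolytica", "Y. lipolytica", "R. toruloides"]
actual = [10.0, 12.0, 2.0]
predicted = [11.0, 12.0, 4.0]

weights = instance_weights(labels)
print(weights)
print(weighted_mse(actual, predicted, weights))
```

With scikit-learn, weights like these would typically be passed through the `sample_weight` argument of an estimator's `fit` method, so that errors on the scarce target-species data count for more during training.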
